Go talk to the LLM

Heyo! These so-called "AI" tools are everywhere these days. This post is about all the ways I personally make use of them. I've been meaning to write it for quite some time, as I've heard a lot of people say something along the lines of "I kinda get how this works, but I don't know what to use it for".

I'm no expert by any means, but I've been playing around with them for quite some time. I don't know exactly when I started down this rabbit hole, but it was probably somewhere around late 2020 or early 2021. What follows is a list of the different ways I use LLMs, in no particular order. Hopefully you'll find a few good ideas that you could adapt too. I've split them into indirect and direct usage: indirect ones are tools/products that use LLMs under the hood, and direct ones are where I'm interacting with the models/APIs myself.

Indirect Usage #

Here is a list of the tools I use on a daily basis that make use of LLMs under the hood. I won't go into the details of how they work, but I'll give a brief overview of what each one does and how I use it.

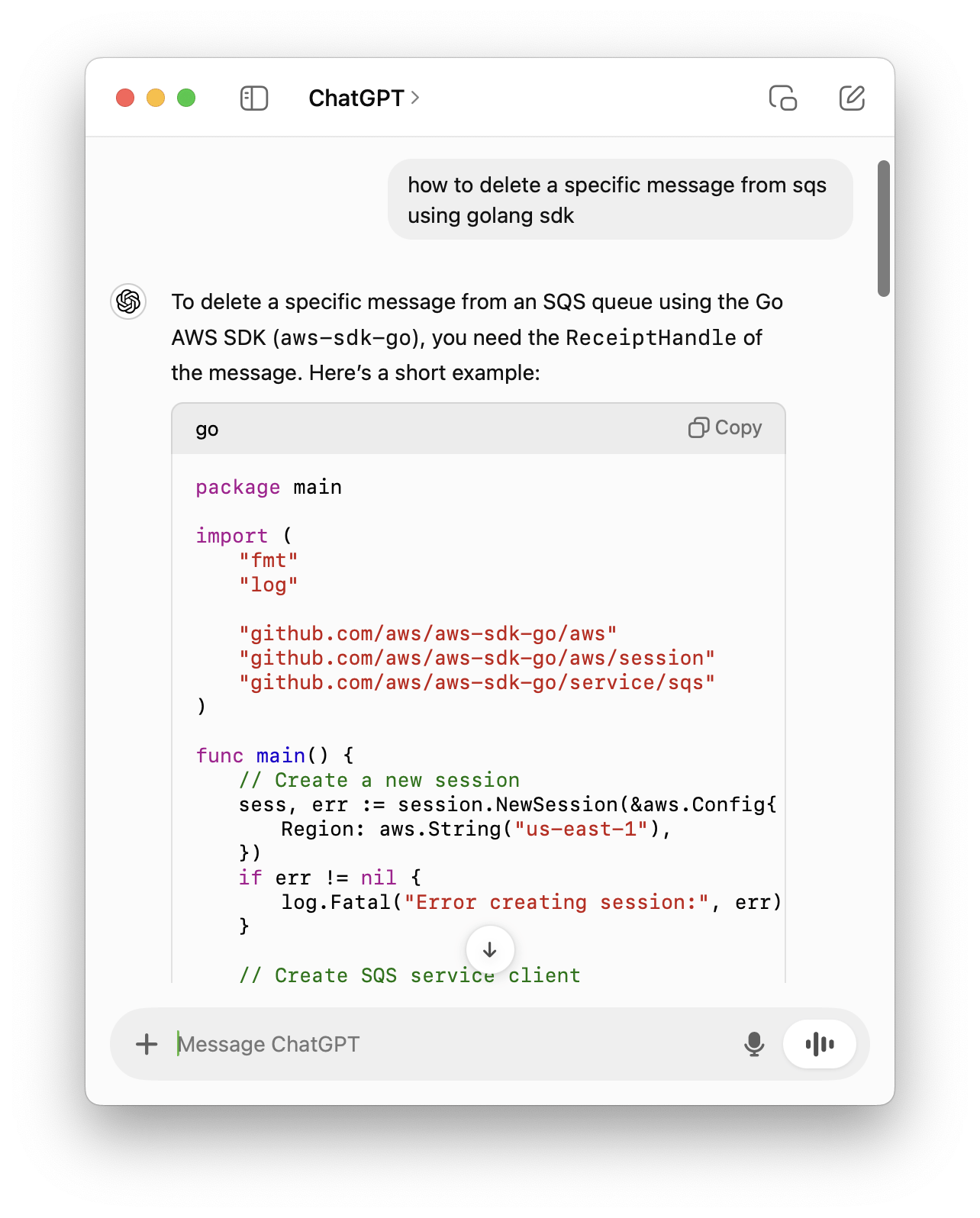

Asking questions via ChatGPT #

I mean, everyone knows about ChatGPT. I still remember when my dad asked me about it and even said he had been using it for a while. It is crazy how it has become such a household name. I used to ask it to write a lot of code, but these days I primarily use it for "how do I do that" or "what is that" kind of questions, where I'm trying to refresh my memory or am too lazy to look up the docs. I've been asking these kinds of questions a lot more ever since I discovered that the ChatGPT Mac app lets you hit a key combo to bring up a chat window. I could search for these using a search engine, but then I would have to go through a bunch of links to find the answer. With ChatGPT, I just ask the question and get the answer.

The vision models also deserve a mention here. I've used them a couple of times to ask questions when I was too lazy to type or when I wanted to do things like convert an image of a chart to a Mermaid diagram. They can also be used to analyze dashboards or create HTML/JS from UI screenshots.
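If you want to do the chart-to-Mermaid trick through the API instead of the app, here is a minimal Python sketch. It assumes OpenAI's Chat Completions format, where an image is passed as an "image_url" content part holding a base64 data URL. The function name, prompt wording, and default model are illustrative choices, and it only builds the payload rather than sending it.

```python
import base64


def build_chart_to_mermaid_request(png_bytes: bytes, model: str = "gpt-4o-mini") -> dict:
    """Build a Chat Completions payload asking a vision model to turn a chart image into Mermaid."""
    # The image is inlined as a base64 data URL inside an "image_url" content part.
    data_url = "data:image/png;base64," + base64.b64encode(png_bytes).decode("ascii")
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {
                        "type": "text",
                        "text": "Convert this chart into a Mermaid diagram. Reply with only the Mermaid code.",
                    },
                    {"type": "image_url", "image_url": {"url": data_url}},
                ],
            }
        ],
    }
```

You would read a screenshot with something like `open("chart.png", "rb").read()` and POST the resulting dict to the API.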

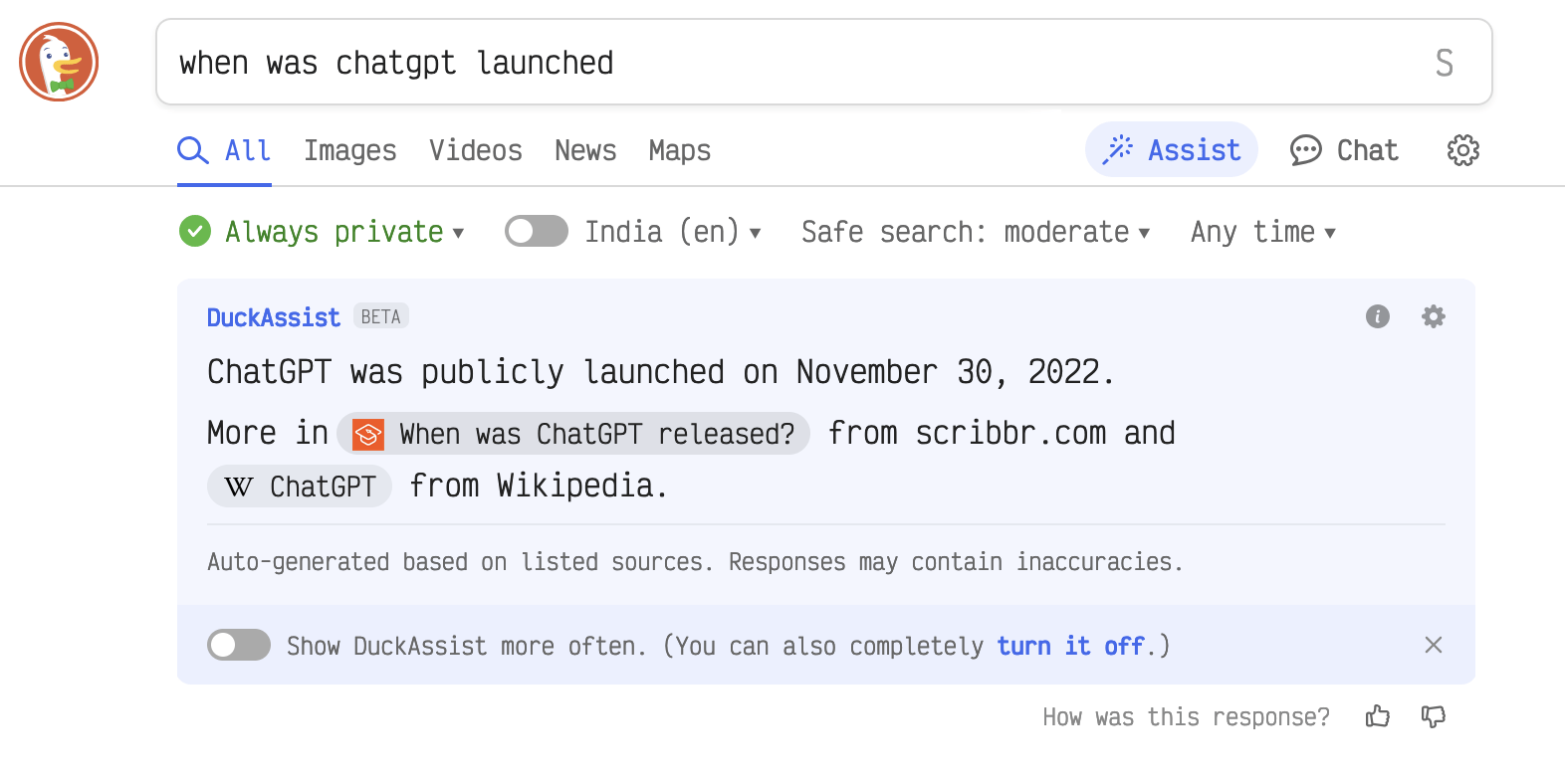

DuckAssist deserves an honorable mention here. It answers questions directly in your DuckDuckGo search results.

Editor completions via GitHub Copilot #

Next up is one that I use quite a bit. It is even suggesting completions as I write this post. I don't really rely on it for blog posts, but I've found it to be really helpful for writing code, primarily tests, documentation, and boilerplate. I use Emacs, and I access it via copilot-emacs/copilot.el and chep/copilot-chat.el, though I don't use the latter as much because I built something more specific to my use case.

I got access to it early on for free, and to be frank, that is the reason I started using it. It kind of sucked initially, and I even stopped using it at some point after the initial excitement wore off, but it has gotten a lot better since. I've also heard some good things about Supermaven.

In any case, the best part about using GH Copilot in Emacs is that it autocompletes anything I open in Emacs. As I mentioned earlier, that includes blog posts (though it's less useful there), commit messages, emails, and anything else I write from Emacs.

Building prototypes via Claude #

I've found (and the benchmarks seem to agree) that Claude, specifically 3.5 Sonnet, is a much better model for generating code than anything OpenAI has right now (o1 might beat it, but I haven't used it). This, combined with artifacts, makes Claude a really powerful tool for building prototypes. I have built many useless and some useful projects with it. I've even built a few relatively bigger projects where I started with Claude and then built on top of that using aider, with the same 3.5 Sonnet model. We'll talk about aider later.

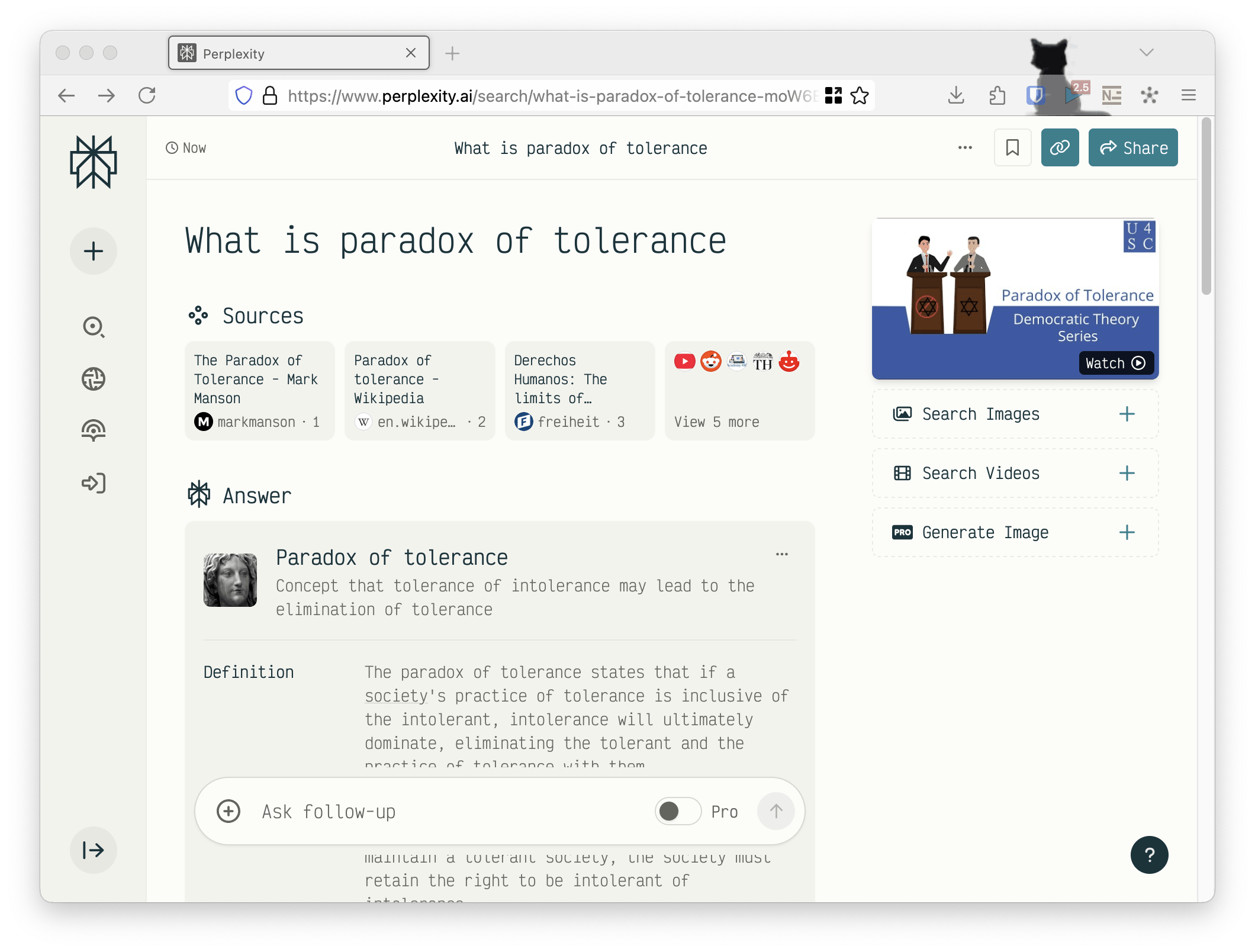

Perplexity for research #

It is no secret that old-school search engines mostly suck these days. I mean, the web in general is getting siloed and ad-ridden; I need multiple layers of tracking protection and ad blockers just to have a usable web. If you are still using Google (especially if you're a software engineer), at least switch to DuckDuckGo. OK, now that the rant is over, I want to talk about Perplexity. I use it as a search engine, mostly for non-code-related searches. For those who are not aware, Perplexity searches the web for your query and uses the results to answer your question. It is like ChatGPT, but grounded in search results.

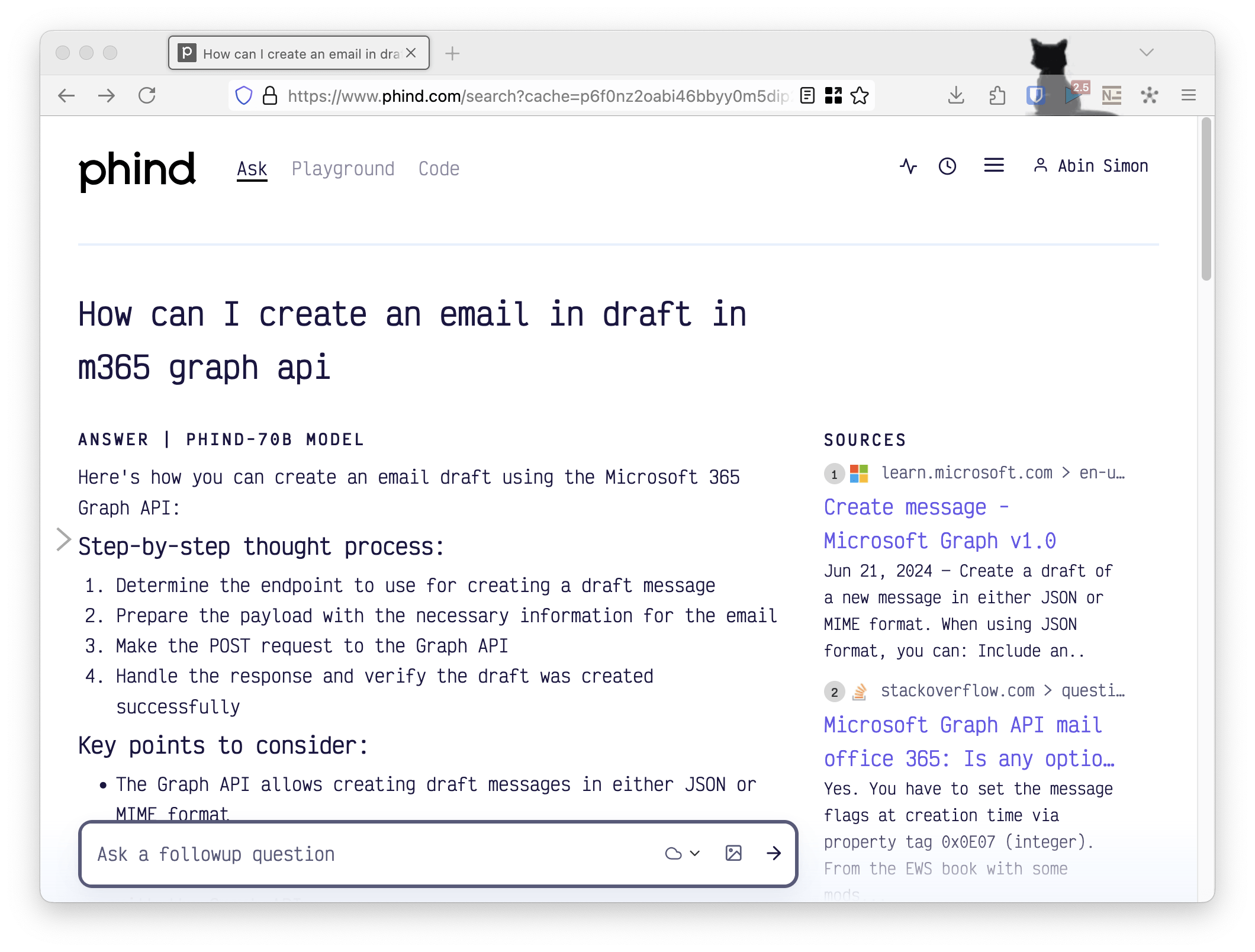

Phind for code research #

Phind is similar to Perplexity, but I've found it to be better for code-related topics. I work a lot with Microsoft Graph, which has a relatively large API, with details about edge cases often only available in random forums. I've found Phind really helpful when I need to look up those edge cases or docs that I had a hard time hunting down. I can even ask it to sketch out a high-level implementation showing how to solve the problem I'm running into.

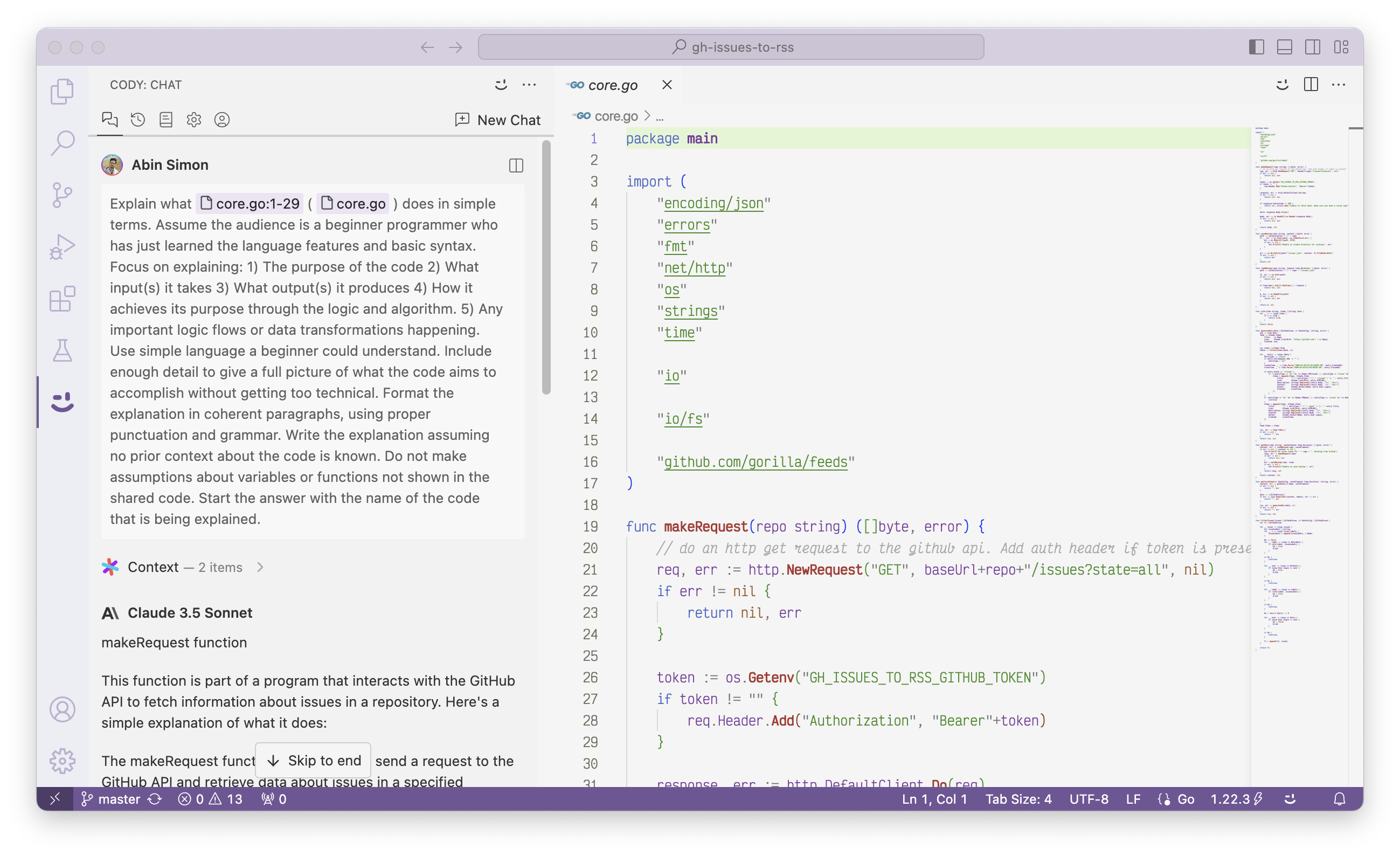

Cursor and Sourcegraph Cody for writing code #

I'm going to bucket these two together since they are kind of similar. Cursor is a fork of VSCode with built-in "AI" features, and its feature list is pretty interesting. Cody is something similar, but shipped as a VSCode plugin. As I mentioned above, I use Emacs, so I don't use either of them a lot. I did force myself to use Cursor for a while just to understand its features and see what it's like, and I found it to be pretty good.

I do use Cody from time to time to ask questions about codebases, and I miss it being a separate standalone app. Project-wide questions on larger projects are still a bit meh; both tools get them right about 50% of the time. Asking about a specific file or function works a lot better, but I don't necessarily need them for that. Maybe sourcegraph/emacs-cody gets some love at some point and I can use Cody from Emacs.

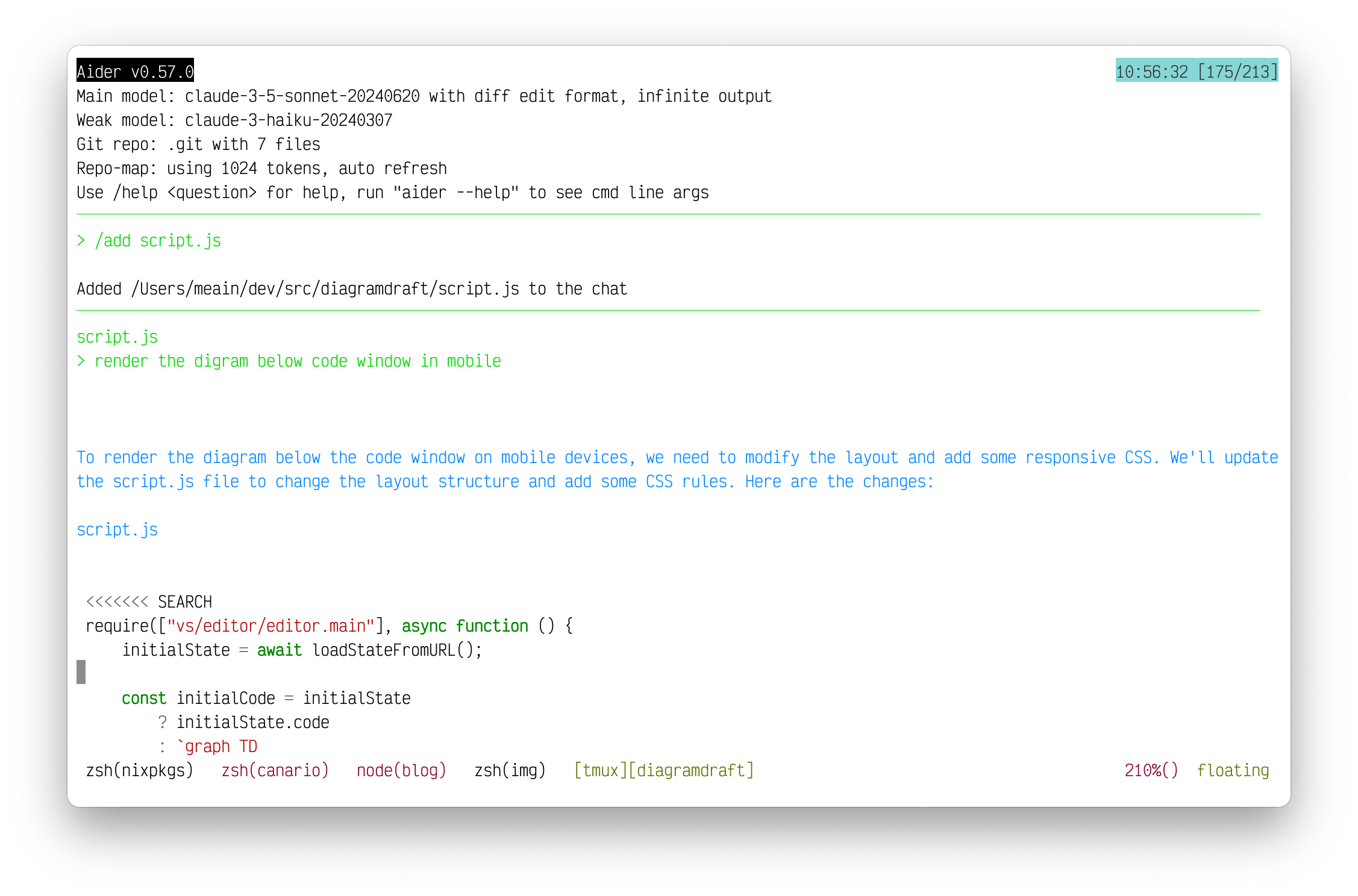

Aider for building small projects #

I enjoy writing code, but more importantly, I like fixing small annoyances using code, and Aider is perfect for that. You can ask it project-wide questions or have it make project-wide edits. I've used it to build a couple of small projects, but it does get expensive (especially when you are not earning in USD).

DiagramDraft is one of the recent ones I built (and even named) using aider. It is a small web app that lets you create Mermaid charts online. I built this mostly because I didn't like how the "official" online Mermaid editor looked. If I had to write all the code, I might not have built it, but with Aider, I could just sit down and write English for a couple of hours and have something working.

PS: For Cursor users, you can think of Aider as something similar to Composer, but in a terminal (or a browser, these days).

Notion AI for docs search #

Our org has a very good culture of writing docs for everything. One big problem when you document everything is finding things later. There were a lot of attempts at categorizing and filing stuff, but Notion AI has been a game changer. I can just type in my question, and it will answer it. Even if the answer is not correct, the pages it lists as sources (well, that part is probably not AI but just vector/similarity search, but I'll take it) are usually enough to find the answer. It has a bunch of other features, but this is pretty much all I use it for.
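The vector/similarity search guess above can be illustrated with a toy example. This is not how Notion AI actually works (its internals aren't described here); it is a hypothetical Python sketch that uses word counts as a stand-in for real embeddings and ranks pages by cosine similarity to the question, which is roughly how a "sources" list could be produced.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts. A real system would use an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_sources(query: str, docs: dict[str, str], k: int = 3) -> list[str]:
    """Return the titles of the k docs most similar to the query."""
    q = vectorize(query)
    ranked = sorted(docs, key=lambda title: cosine(q, vectorize(docs[title])), reverse=True)
    return ranked[:k]
```

Swapping `vectorize` for calls to an embedding model is what turns this from keyword overlap into semantic search.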

Direct Usage #

Now we get to the more fun stuff: how I use LLMs or LLM APIs directly. I primarily use gpt-4o-mini as it is cheap enough, but at the same time good enough for most of my use cases. If I'm working with more complex code, I tend to reach for 3.5 Sonnet and very rarely gpt-4o. I also run a few models on my own hardware (just your average MacBook), mostly llama3.2 3b these days, but I don't use them as much since they are not as good (I mean, it is a 3b model after all).

By the way, when I say I use them directly, I don't mean I'm sitting here writing curl commands in my shell. I'm talking about tools that I built, or ones whose code I actively tweak, that call the LLM APIs I pay for.
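For reference, this is roughly what a single direct API call looks like. A minimal Python sketch using only the standard library, assuming OpenAI's Chat Completions endpoint and an OPENAI_API_KEY environment variable; it is not the code behind any of the tools in this post, just an illustration of the request shape.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(prompt: str, model: str = "gpt-4o-mini", api_key: str = "") -> urllib.request.Request:
    """Build (but don't send) a Chat Completions request for a single user prompt."""
    key = api_key or os.environ.get("OPENAI_API_KEY", "")
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {key}"},
        method="POST",
    )


def ask(prompt: str, model: str = "gpt-4o-mini") -> str:
    """Send the prompt and return the first choice's text (needs network access and an API key)."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]
```

Keeping the request building separate from sending makes it easy to swap the model name or inspect the payload without spending tokens.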

Working with code using meain/yap #

Yap is an Emacs package that I built to work with LLMs in the editor. I've distilled the operations down to three: write, to write into the current buffer (the open editor); rewrite, to rewrite a selection; and prompt, to ask the LLM a question. I've created templates for many common operations so that I can bind them to keybindings if necessary. Here are a few examples of what I use it for:

- Explain code: I have this bound to a key combination so that I can just hit it and get an explanation of the code that I'm looking at.

- Explain code with Mermaid charts: Generate Mermaid charts that explain the flow of a function or module.

- Generate shell command: I can run this in shell buffers to convert English to shell commands.

- Optimize code: Just a generic "make this better/faster" query. You would be shocked at how well it works, especially with the 3.5 Sonnet model.

- Fix LSP error: Send it a code block along with the error reported by the LSP and have the LLM fix it for you.

- Summarize: Your classic summarization query. You can use this both in code and non-code buffers. Since everything is in Emacs, you can use it in email or browser buffers as well.

- Writing an intro: When taking notes, especially about some unknown concept, I put an LLM-generated ELI5-like intro at the top of the note.
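To make the template idea concrete, here is a hypothetical sketch in Python. yap itself is Emacs Lisp and its real templates will differ; the template names and prompt wording below are illustrative assumptions that mirror the list above. Each template fills in the selected text (and, for the LSP case, the error) before it is sent as a chat message.

```python
# Hypothetical prompt templates; names and wording are illustrative, not yap's actual ones.
TEMPLATES = {
    "explain-code": "Explain what the following code does:\n\n{text}",
    "explain-code-mermaid": "Explain the flow of the following code as a Mermaid flowchart:\n\n{text}",
    "shell-command": "Convert this request into a single shell command. Reply with only the command:\n\n{text}",
    "optimize-code": "Make the following code better or faster. Reply with only the code:\n\n{text}",
    "fix-lsp-error": "Fix this LSP error:\n{error}\n\nin this code:\n\n{text}",
    "summarize": "Summarize the following:\n\n{text}",
    "eli5-intro": "Write a short ELI5-style intro to the concept below:\n\n{text}",
}


def render(template_name: str, **fields: str) -> list[dict]:
    """Fill a template with the given fields and wrap it as a single chat message."""
    prompt = TEMPLATES[template_name].format(**fields)
    return [{"role": "user", "content": prompt}]
```

Because each operation is just a name plus a string, binding a new one to a key is mostly a matter of adding an entry.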

karthink/gptel also deserves a mention here. It provides a more chat-like interface in Emacs, which I use when I want to pick certain files/docs as context and ask questions about them.

I've also used yap a lot for reviewing my blog posts, as in fixing spelling and grammar mistakes.

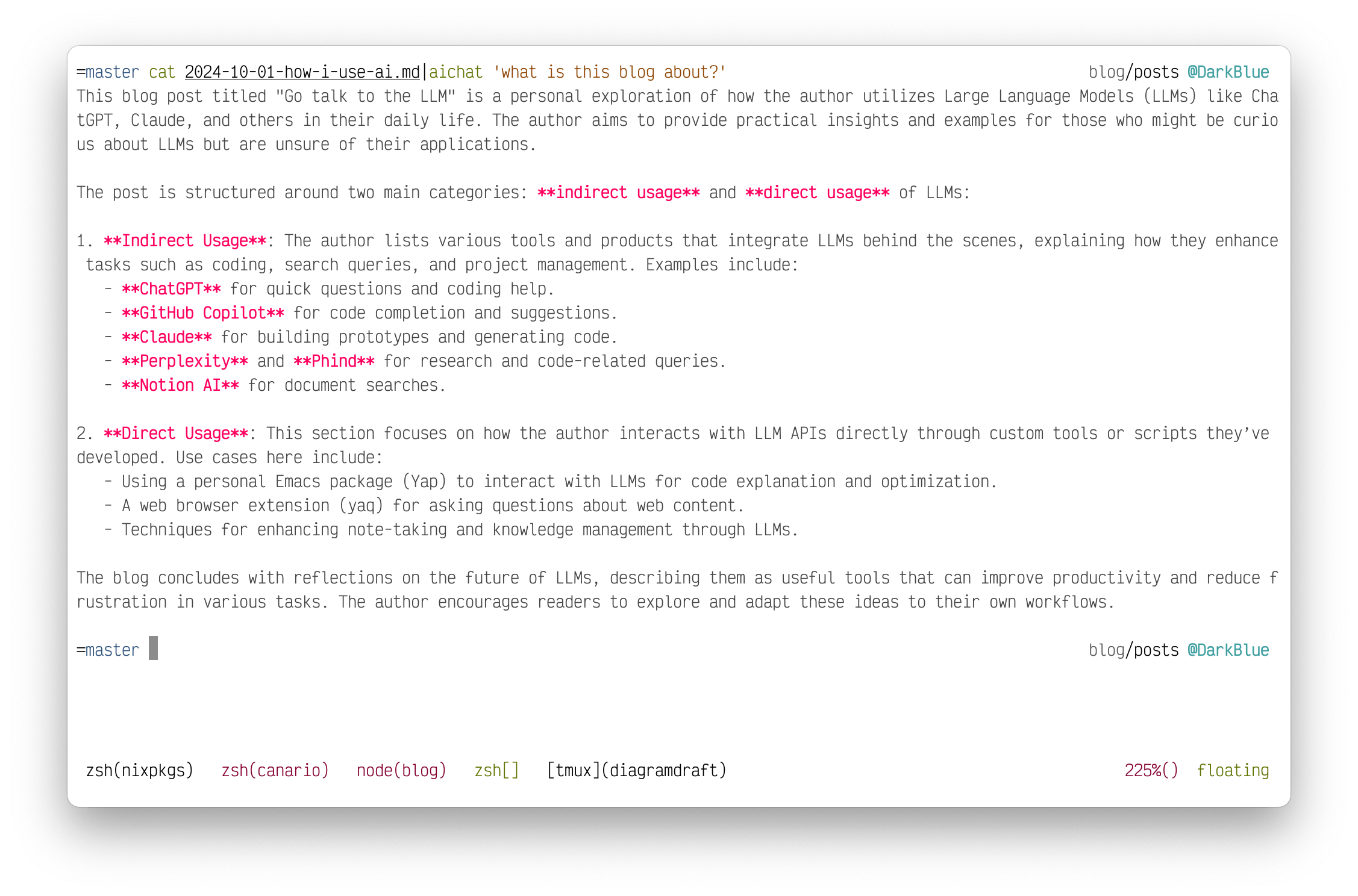

LLM in the CLI using sigoden/aichat #

I've played around with a bunch of tools for interacting with LLMs from the terminal, but I finally settled on this one. For the most part, I want to send some file content to it and ask questions about it, convert it to something else, or perform operations like summarizing. ayo is an example of a script where I've written down prompts for some common tasks. I also use it for ChatGPT-like use cases, where I ask it to explain how to do something or to get a feel for new concepts.

Here is a screenshot of aichat summarizing this blog entry:

Another great use for this is to send it your server logs and ask it to explain what is going on. I've found it really helpful when I'm debugging an issue and have to parse through a lot of logs to understand what is happening. Just be careful to mask any PII :D.
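Masking can be as simple as a couple of regexes run over the logs before they leave your machine. A minimal Python sketch, assuming emails and IPv4 addresses are the PII you care about; real logs may contain names, tokens, or IDs that need more patterns than this.

```python
import re

# Illustrative, not exhaustive: these only catch common email and IPv4 formats.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def mask_pii(log_text: str) -> str:
    """Replace emails and IPv4 addresses with placeholders before sending logs to an LLM."""
    masked = EMAIL_RE.sub("<EMAIL>", log_text)
    return IPV4_RE.sub("<IP>", masked)
```

You could then pipe the masked output into aichat along with your question.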

Asking questions about a webpage using meain/yaq #

This is another tool that I wrote. It is a browser extension that lets you ask questions about any page. All it does is send the content of the page as context along with your question and get the answer back. You can also select a specific piece of text to focus on.

I initially wrote it mostly to get summaries of web pages, but it has come in handy for a lot of use cases. I've listed a couple of use cases in meain/yaq#5. I'm certain you'll find some interesting ones in there.
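The core idea behind yaq (page content as context, plus an optional selection to focus on) can be sketched in a few lines. This is a hypothetical Python illustration, not yaq's actual code: the message wording and the MAX_CHARS truncation limit are assumptions, the latter added because long pages may not fit in a model's context window.

```python
MAX_CHARS = 20_000  # Hypothetical budget to keep long pages within the model's context window.


def build_page_messages(page_text: str, question: str, selection: str = "") -> list[dict]:
    """Combine page content (and an optional selection to focus on) with the user's question."""
    context = page_text[:MAX_CHARS]
    system = "Answer questions using the web page content provided by the user."
    user = f"Page content:\n{context}\n\n"
    if selection:
        # The selection narrows the focus while the full page still provides context.
        user += f"Focus on this selected text:\n{selection}\n\n"
    user += f"Question: {question}"
    return [{"role": "system", "content": system}, {"role": "user", "content": user}]
```

Plain truncation is the crudest option; chunking the page or summarizing it first are alternatives when pages are very long.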

AI in your second brain (notes) #

I have been sucked into the whole "second brain" thing. I used to have a bunch of markdown files that I considered notes and edited using whatever editor I was using at the time. But after watching a lot of YouTube, I learned that I needed backlinks or my life was worthless. I don't remember what I started with, but nowadays I use silverbulletmd/silverbullet, and before that I was using logseq/logseq. I also use Obsidian on my phone with the same set of notes, because why not. All of them have some sort of AI features built in. Being able to search/query through all my notes, which include tasks, meeting notes, etc., is pretty useful.

Bonus #

These are tools that I have not played around with a lot, but have found interesting whenever I did.

NotebookLM for research #

NotebookLM is a tool from Google where you can upload a couple of documents, ask questions about them, and accumulate notes around them. I've used it a handful of times to read papers or research topics.

Illuminate to create podcasts from research papers #

Illuminate is a dedicated tool for converting research papers into podcasts (NotebookLM now has a similar feature). I've listened to quite a few papers this way. It is a good way to consume papers when you are not in the mood to read.

Image and voice models #

I've used them a couple of times: images mostly for adding to my notes, and voice just for fun or when I wanted to build something like a TTS tool. These get expensive real quick, so I don't use them much. For image models, DALL-E 3 is pretty neat, and OpenAI has a few good models for both image and voice. Outside of OpenAI, I would also consider Midjourney for images and ElevenLabs for voice.

AI music using Suno and Udio #

They are kind of fun to play around with when you are bored. To be frank, I feel like I could listen to a playlist completely generated by these models and not hate myself in the process.

Another fun thing you could do is ask any LLM to generate music by writing Sonic Pi code. The best part is that you can tweak the result much faster, since it is just code.

Meta AI in WhatsApp #

Most of the folks I talk to outside of work are on WhatsApp, so it is kind of useful to have an "AI" in an app that I open a lot. That said, I have only used it a couple of times to get some quick info. I've also found it useful for generating some silly images.

All the agentic stuff #

I have not played around with any of these enough to form an opinion, but I've found them to be interesting. I really like the idea of gptscript-ai/gptscript.

Ollama for running local models #

This list would not be complete if I didn't mention Ollama. I use it to run all my local models, primarily llama3.2 3b variants, as I mentioned earlier. I also run a few embedding models with it. For those who want to run something locally, Ollama is a great choice.
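Ollama exposes a local HTTP API, so talking to it needs nothing beyond the standard library. A minimal Python sketch, assuming Ollama is running on its default port (11434) and that llama3.2:3b has been pulled. It calls the /api/generate endpoint with streaming turned off, so the whole reply arrives as one JSON object with a "response" field.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local address


def build_ollama_request(prompt: str, model: str = "llama3.2:3b") -> urllib.request.Request:
    """Build a non-streaming generate request for a locally running Ollama server."""
    body = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "llama3.2:3b") -> str:
    """Send the prompt to Ollama and return the generated text (requires the server to be running)."""
    with urllib.request.urlopen(build_ollama_request(prompt, model)) as resp:
        return json.load(resp)["response"]
```

After `ollama pull llama3.2:3b`, something like `generate("Explain backlinks in one sentence")` should work without any API key.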

Besides these, there are LLMs in the form of chatbots everywhere, like the ones in AWS, on Amazon, or even in your bank's app. I've used them a couple of times, but they're not worth talking about much.

Conclusion #

I love how Simon Willison describes LLMs: as "weird interns", in that they know a lot of stuff and are super enthusiastic about doing everything, but need some guidance. I've found that to be true. I love playing around with these, and from the looks of it, they are not going away. So far, I see them as tools that make me more productive and, more importantly, less frustrated. Hopefully, this post gave you a few good ideas about how you could use LLMs.